The Importance of a robots.txt File

- By OCX Team

- Apr 11, 2017

Some customers asked our engineers why their site seems slow and generates very high “load”.

Quick answer: search engines crawl each page on the site in hundreds of different ways, following every possible link that searches, sorts, or filters the results.

One of these pages is the category page, where the visitor or customer can choose the sort order, the number of items per page (limit), and so on.

E.g: yourstore.com/index.php?route=product/category&path=x&sort=pd.name&order=ASC

Crawlers following these links can generate hundreds of times as many requests as real visitors do, because the same category is also reachable at URLs such as:

yourstore.com/index.php?route=product/category&path=x&sort=p.price&order=ASC

yourstore.com/index.php?route=product/category&path=x&sort=p.price&order=DESC

yourstore.com/index.php?route=product/category&path=x&sort=rating&order=DESC

....

Since all these pages are virtually identical, and since you want each search engine to see only one version of each page to avoid “diluting” your rankings, you should use a robots.txt file to keep search engines from crawling the extra versions.
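To make the duplication concrete, here is a minimal Python sketch that strips the presentation-only parameters from the example URLs and counts how many distinct pages remain. The `http://` scheme, the `PRESENTATION_PARAMS` set, and the `canonical_url` helper are assumptions made for illustration; they are not part of OpenCart.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Assumption: these parameters only change how results are displayed.
PRESENTATION_PARAMS = {"sort", "order", "limit"}

def canonical_url(url: str) -> str:
    """Drop presentation-only parameters so all variants map to one URL."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in PRESENTATION_PARAMS
    ]
    return urlunsplit(parts._replace(query=urlencode(kept, safe="/")))

base = "http://yourstore.com/index.php?route=product/category&path=x"
variants = [
    base + "&sort=pd.name&order=ASC",
    base + "&sort=p.price&order=ASC",
    base + "&sort=p.price&order=DESC",
    base + "&sort=rating&order=DESC",
]

unique_pages = {canonical_url(u) for u in variants}
print(len(variants), "crawled URLs ->", len(unique_pages), "distinct page")
# 4 crawled URLs -> 1 distinct page
```

Every variant costs the server a full page render, yet they all collapse to the same category page.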

You might be surprised to hear that one small text file, known as robots.txt, could be the downfall of your website.

If you get the file wrong you could end up telling search engine robots not to crawl your site, meaning your web pages won’t appear in the search results.

Therefore, it’s important that you understand the purpose of a robots.txt file and learn how to check you’re using it correctly.
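One way to check a robots.txt before uploading it is Python's standard `urllib.robotparser` module. The sketch below compares a harmless file with a broken one that accidentally blocks the whole site. The `can_crawl` helper and the sample file contents are illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser

def can_crawl(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if `agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)

# An empty Disallow value blocks nothing.
harmless = "User-agent: *\nDisallow:\n"
# A single slash blocks the entire site.
broken = "User-agent: *\nDisallow: /\n"

home = "http://yourstore.com/"
print(can_crawl(harmless, home))  # True
print(can_crawl(broken, home))    # False
```

The two files differ by a single character, which is why it pays to test the file rather than trust it by eye.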

What is robots.txt?

A robots.txt file is a file at the root of your site that indicates which parts of your site you don’t want search engine crawlers to access.

Robots.txt should only be used to control crawling traffic, typically because you don't want your server to be overwhelmed by Google's crawler or to waste crawl budget crawling unimportant or similar pages on your site.

How to create a robots.txt file?

Writing a robots.txt file is easy. Let's start with the basics and follow these simple steps:

- Open any text editor you want (Notepad, Notepad++, Sublime, Atom) and save the file as ‘robots’, all lowercase, choosing .txt as the file extension, so that the file is named robots.txt.

- Next, add the following two lines of text to your file:

User-agent: *

Disallow:

The 'User-agent' line names the robot (search engine spider) that the rules below it apply to. The asterisk (*) means the rules apply to all spiders.

Here, no file or folder is listed on the Disallow line, which means that every directory on your site may be accessed. This is the most basic robots.txt file.
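To see how the User-agent line selects which rules a spider follows, here is a small sketch using Python's standard `urllib.robotparser`. The robot name `ExampleBot` is hypothetical and only serves to contrast a named group with the `*` group.

```python
from urllib.robotparser import RobotFileParser

# ExampleBot is a made-up robot name used only for this illustration.
ROBOTS_TXT = """\
User-agent: ExampleBot
Disallow: /

User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "http://yourstore.com/index.php"
for agent in ("ExampleBot", "Googlebot"):
    verdict = "allowed" if parser.can_fetch(agent, url) else "blocked"
    print(agent, verdict)
# ExampleBot blocked
# Googlebot allowed
```

A spider that finds a group with its own name uses that group; every other spider falls back to the `*` group.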

If you’d like to block the spiders from certain areas of your store, your robots.txt might look something like this:

User-agent: *

Disallow: /something/

Disallow: /something2/

The above three lines tell all robots that they are not allowed to access anything in the "something" and "something2" directories or their sub-directories.

Keep in mind that only one file or folder can be used per Disallow line. You may add as many Disallow lines as you need.
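Disallow rules are matched as path prefixes, which the following sketch illustrates with Python's standard `urllib.robotparser`. The test paths are made up for the example.

```python
from urllib.robotparser import RobotFileParser

rules = [
    "User-agent: *",
    "Disallow: /something/",
    "Disallow: /something2/",
]

parser = RobotFileParser()
parser.parse(rules)

def allowed(path: str) -> bool:
    """Check a path on the hypothetical store against the rules above."""
    return parser.can_fetch("Googlebot", "http://yourstore.com" + path)

print(allowed("/something/page.html"))   # False: inside a blocked directory
print(allowed("/something2/sub/dir/"))   # False: sub-directories are blocked too
print(allowed("/something-else/"))       # True: different prefix
print(allowed("/something"))             # True: no trailing slash, so no match
print(allowed("/index.php"))             # True
```

Note the trailing slash in each rule: `/something/` blocks the directory and its contents, but not a file or page named `/something`.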

OK, but what about OpenCart in particular?

Here it is

Download robots.txt example file

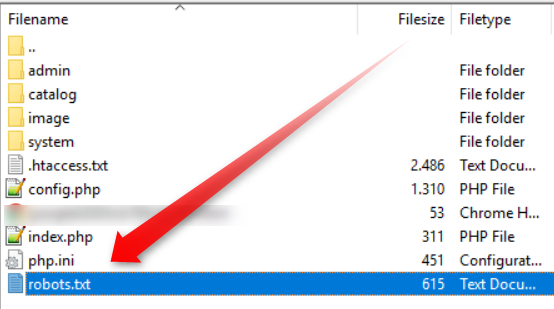
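The contents of the example file are not reproduced here. As a rough illustration only, rules that target the sort, order, and limit variants shown earlier usually rely on the `*` wildcard, which major crawlers such as Googlebot and Bingbot honor. The patterns in `ASSUMED_DISALLOW` below are assumptions, not the contents of the downloadable file. Python's `urllib.robotparser` matches plain path prefixes, so this sketch uses a small hand-written matcher instead.

```python
import re

# Hypothetical rules; NOT taken from the downloadable example file.
ASSUMED_DISALLOW = [
    "/*?sort=", "/*&sort=",
    "/*?order=", "/*&order=",
    "/*?limit=", "/*&limit=",
]

def rule_to_regex(rule: str):
    """Translate a rule with '*' wildcards and an optional trailing '$' into a regex."""
    anchored = rule.endswith("$")
    body = rule[:-1] if anchored else rule
    pattern = ".*".join(re.escape(part) for part in body.split("*"))
    return re.compile("^" + pattern + ("$" if anchored else ""))

def is_blocked(path_and_query: str, rules=ASSUMED_DISALLOW) -> bool:
    """Return True if any rule matches the start of the path plus query string."""
    return any(rule_to_regex(rule).match(path_and_query) for rule in rules)

print(is_blocked("/index.php?route=product/category&path=x&sort=p.price&order=ASC"))  # True
print(is_blocked("/index.php?route=product/category&path=x"))                         # False
```

With rules like these, the plain category page stays crawlable while its sorted variants are skipped.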

Where do I have to add this robots.txt file?

Using an FTP client such as FileZilla, upload the robots.txt file to the root directory of your store.
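Crawlers only request robots.txt from the root of the host, so after uploading it is worth confirming where they will look for it. The sketch below derives that address from any store URL. The `robots_txt_url` helper and the `https://` scheme are assumptions for illustration.

```python
from urllib.parse import urlsplit, urlunsplit

def robots_txt_url(any_store_url: str) -> str:
    """Return the address where crawlers look for robots.txt on this host."""
    parts = urlsplit(any_store_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))

print(robots_txt_url("https://yourstore.com/index.php?route=product/category&path=x"))
# https://yourstore.com/robots.txt
```

If the store is installed in a sub-folder, the file still has to be reachable at the root address printed above. Opening that address in a browser after the upload is a quick way to confirm the file is in the right place.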